Minimax for Gomoku (Connect Five)

Refresher #

If you all remember from the last post, we used depth-first search to solve the game of Tic Tac Toe, recursively traversing all the possible branches and finding the outcome given optimal play. Since we began from the bottom, we were able to tell if a move was winning, losing, or tying and then work our way up, playing optimal moves for each player. This made the solution very simple for the following reasons:

- We didn’t need to store or create a game tree of any sort

- We only had to detect wins and losses (This will become clearer after learning other algorithms)

However, it’s major flaw made it impractical for even slightly more complex games—It’s complexity increases exponentially with both the branching factor and the depth, making it take literally years to run for Connect Four on a normal computer.

Minimax #

The most basic solution to this problem is actually another for of depth-first search, except this time, instead of searching to the end of the game, you only search to a certain depth. This brings up the additional complexity in minimax, as an evaluation function is required to assess how good each position is. Instead of returning a 1, -1, or 0 depending on if the game is a win, loss, or tie, you might return a 0.8, -0.25, or a 0.001 based off of the output of your heuristic evaluation function.

What do I mean?

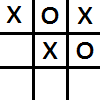

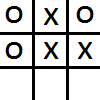

Take the following Tic Tac Toe position for example:

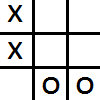

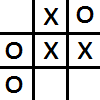

No matter whose turn it is, the X’s are going to win. The analysis function should return a very positive value for the X’s. However, the player’s turn still plays a very important role in analysis functions. For example:

Without looking at the player’s turn, the position would look completely tied, but knowing that X’s start and it is therefore their turn, it is clear that X’s can win in the next move. Your evaluation function should reflect this, and submit a very high positive score for the X’s, similar to the score in the first position.

You should already have some form of idea on how to score positions for Gomoku. It should take into account the following factors:

- How many sets of consecutive squares you control in a row

- How long are each of those sets

- Whose turn it is

- How many open ends each set contains (For example: if you control two consecutive positions with 0 open ends, there is no chance those two positions will become a five-in-a-row, and therefore should no be awarded any points)

Note that you should count up the sets in all directions (vertically/horizontally/diagonally) so that some squares may be counted for multiple different sets.

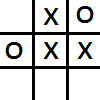

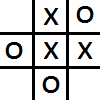

I understand this may be hard to visualize, so let’s use the Tic Tac Toe analogy again:

You would count the following sets for X:

- Horizontal:

- one X with one open end

- two X’s with 0 open ends

- Vertical:

- two X’s with 1 open end

- one X with one open end

- Diagonal (top-left to bottom-right):

- two X’s with 0 open ends

- one X with two open ends

- Diagonal (top-right to bottom-left):

- one X with 0 open ends

- one X with one open end

- one X with one open end

For Gomoku, this may be implemented in a similar method:

function gomokuShapeScore(consecutive, openEnds, currentTurn) {

if (openEnds == 0 && consecutive < 5)

return 0;

switch (consecutive) {

case 4:

switch (openEnds) {

case 1:

if (currentTurn)

return 100000000;

return 50;

case 2:

if (currentTurn)

return 100000000;

return 500000;

}

case 3:

switch (openEnds) {

case 1:

if (currentTurn)

return 7;

return 5;

case 2:

if (currentTurn)

return 10000;

return 50;

}

case 2:

switch (openEnds) {

case 1:

return 2;

case 2:

return 5;

}

case 1:

switch (openEnds) {

case 1:

return 0.5;

case 2:

return 1;

}

default:

return 200000000;

}

}As you can see, this function takes the number consecutive, number of open ends, and turn into account. It gives much more points to a 4 with open ends when it is your turn than when it isn’t. Also notice how it gives 0 points to any shape that has no open ends. The weights for such a function are in practice chosen empirically.

There is still another major piece missing however—the means of finding all the sets and passing them to the set-scoring function. Consider the following implementation for counting horizontal sets:

function analyzeHorizontalSetsForBlack(current_turn) {

var score = 0;

var countConsecutive = 0;

var openEnds = 0;

for (var i = 0; i < board.length; i++) {

for (var a = 0; a < board[i].length; a++) {

if (board[i][a] == 'B')

countConsecutive++;

else if (board[i][a] == ' ' && countConsecutive > 0) {

openEnds++;

score += gomokuShapeScore(countConsecutive,

openEnds, current_turn == 'black');

countConsecutive = 0;

openEnds = 1;

}

else if (board[i][a] == ' ')

openEnds = 1;

else if (countConsecutive > 0) {

score += gomokuShapeScore(countConsecutive,

openEnds, current_turn == 'black');

countConsecutive = 0;

openEnds = 0;

}

else openEnds = 0;

}

if (countConsecutive > 0)

score += gomokuShapeScore(countConsecutive,

openEnds, current_turn == 'black');

countConsecutive = 0;

openEnds = 0;

}

return score;

}Of course, this is just one possible implementation, and is in no way the fastest. In addition, it only analyzes for black, and horizontally—a real implementation should be able to handle both colors and in all directions. You would subtract or divide the points of one player by the points of the other. However, this function should still give you an idea on the kind of function that is required for summing up all the sets.

Now that we can build our analysis function, we still need to use a minimax algorithm to implement it. As a quick refresher, the idea is similar to depth-first search in that we go branch by branch having each player try to maximize their outcome. However, instead of going all the way to the end of the game, we cut the function off at a specific depth, and use our analysis function to tell how good that position is.

At depth 1, you would simply consider all the board positions once you play one move, and choose the one that is the most favorable for you.

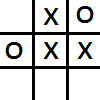

Given the same position above:

The children to be analyzed would be:

Note that during your analysis, you assume it is your opponent’s turn, not your turn! This will make the third child be clearly the most favorable, since in all other children, X has a two in a row with an open space (since it will also be their turn, your analysis function could give that a very high score). In this manor, you have successfully blocked their win.

Improving this is easy. We are currently only looking at a depth of 1, or of 1 ply (plies are used to indicate depth—five ‘plies’ means five turns deep). We could make our AI much stronger by looking at a depth of two plies, consisting of one of your moves and one of their responses (reply). This explains the name ‘Minimax’, while you are trying to maximize your points, your opponent is trying to minimize your points—out of all the possible minimizes you opponents can make in their replies, you chose the maximum, most favorable one for you, and play the corresponding move. You try to make the maximum out of your opponent’s minimums. Of course, increasing the depth past two plies is trivial, as you just need to do more of the same thing.

Here is a very basic example of this in javascript:

function bestGomokuMove(bturn, depth) {

var color = bturn ? 'B':'W';

var xBest = -1, yBest = -1;

var bestScore = bturn ? -1000000000:1000000000;

var analysis, response;

var analTurn = depth % 2 === 0 ? bturn:!bturn;

var moves = get_moves();

for (var i = moves.length-1; i > moves.length - aiMoveCheck - 1

&& i >= 0; i--) {

board[moves[i][1]][moves[i][2]] = color;

if (depth == 1)

analysis = analyzeGomoku(analTurn);

else {

response = bestGomokuMove(!bturn, depth - 1);

analysis = response[2];

}

board[moves[i][1]][moves[i][2]] = ' ';

if ((analysis > bestScore && bturn) ||

(analysis < bestScore && !bturn)) {

bestScore = analysis;

xBest = moves[i][1];

yBest = moves[i][2];

}

}

return [xBest, yBest, bestScore];

}As you can see, in every ply the player tries maximizing their own gains and minimizing the gains of their opponents. You will notice the similarity between this algorithm that the depth-first search one in the previous blog post.

You can build a pretty reasonable AI using this pure minimax algorithm, though there are still many improvements that can be made, that I’ll perhaps cover in a future post. Try playing my own Gomoku AI!